An ambitious path towards accident-free roads

Pearl set out on an ambitious mission to improve driving by making life with your car better through beautiful experiences. Today’s roads offer plenty of frustrations, and we sought not only to address them but to go much further and tackle the dangers of daily driving. RearVision was the first in a planned suite of products aimed at reducing driver frustration and improving road safety.

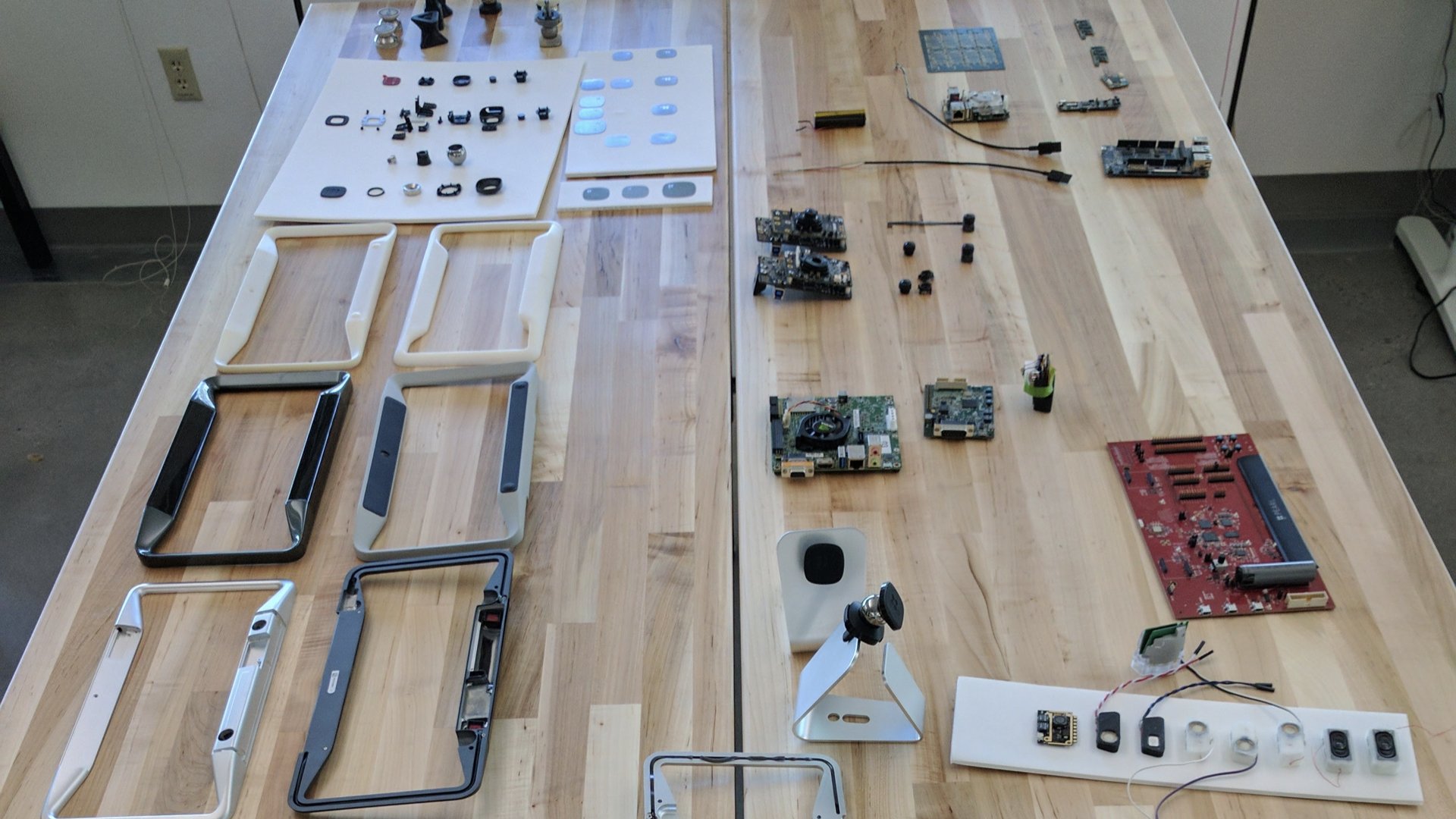

It did so in a novel and elegant way: an aluminum unibody structure powered by integrated solar cells, with dual cameras that provided a 180° view and embedded AI that alerted drivers to oncoming obstacles.

The first touchpoint sets the tone

The product experience for RearVision started with the packaging. We were conscious that a piece of technology designed to be installed on the car could seem unfriendly, or daunting, to some. We put a lot of effort into the visuals and marketing to counter this, but the unboxing and product installation were where the product experience began.

The installation process required the installer to remove their existing license plate before installing RearVision. Once the plate was removed, a few tricky steps could trip up installers, so we made sure the instructions couldn’t be missed: they were attached to the product and had to be unscrewed before installation could begin.

Installation consisted of three major steps: removing the license plate, installing the product and reinstalling the license plate, and plugging the Car Connector into the car’s OBD port. Once those were done, users were directed to the app to bring the product to life.

A key objective for this next phase was to be as helpful as possible. Using video let customers get the gist of the entire process quickly without reading lengthy descriptions.

Using RearVision

No UI is the best UI

Using the app needed to be simple and immediate. We focused most of our efforts on making the app easy to launch once the driver was ready for it. But because a core aspect of RearVision relied on an iPhone or Android phone, we couldn’t fully control the user experience and had to work around iOS and Android limitations. Ideally, our app would have launched the instant a connection was established with our hardware and the car was shifted into reverse; as a workaround, we sent timely notifications to the lock screen.

Once in, the app was meant to be all about visibility, with no distractions. A minimal set of actions was presented to the user but quickly faded away to make way for the main function. The AI/ML-powered computer vision enabled additional features, including the ability to alert drivers to obstacles using visual and aural cues. To give a better sense of the car’s width and trajectory while parking, dynamic guide lines were superimposed on the image. Unlike most parking guides today, these lines adjusted to the environment, making them feel like an intelligent part of the scene. Because of the ultra-wide camera, users could digitally pan left or right across the roughly 180-degree field of view. This let drivers peek left or right down a parking lot aisle as they prepared to exit their spot.

The vision beyond RearVision

The long-term goal of the platform was to produce sensors that would be placed all around the vehicle, giving drivers a heightened sense of situational awareness and, by tapping into automobiles’ control systems, automated safety features.

In terms of UI, here are just a few examples of how this platform could help drivers.

Windshield HUD

Driving Directions

In the following example, the driver need not look at a screen for navigation assistance. Much like we’ve done for one another for decades, this UI uses audio to provide quick and easy driving directions by assessing the surrounding environment and picking out key landmarks or other easily recognizable visual elements for the user to follow.

In another example of no (visual) UI, the system can, in some situations, anticipate the user’s needs without their input.

Impact

Winner of six awards in 2017, including one for packaging